Every startup is rightly afraid of new competition, especially when it comes from Internet giants like Google. Stories of Google entering a market and dominating it within a few years are not new (mobile operating systems with Android, or more recently the browser wars with Chrome). In some cases Google gets a slap on the wrist or an occasional $2.7 billion fine. Nevertheless, the situation is not likely to improve, and Google’s dominance in search will extend into other areas if Google decides to compete there.

This blog post aims to give some insight into the impact of Google prioritizing its own funded initiative over existing players in the market, using real numbers from our own small business (which I am not sure it is still right to call a startup after 10 years) 😊

But before I do so, let me give you a super quick overview of what my company, Speedchecker, does: we provide an easy-to-use and accurate speed test for your internet connection. In almost 10 years we have run over 300 million tests and provided speed test technology to many other companies.

Launching Google speed test

The story begins about a year ago, when Google launched its own speed test, featured directly in search results, first in the USA and then in other English-speaking markets. We knew that the UK launch would happen eventually, but we did not know when.

Luckily for us, Google picked an open-data solution to run its speed test: the noble M-Lab project. M-Lab was founded by internet visionaries such as Vint Cerf and is funded by a consortium of companies including Google. This choice enabled us to analyze the rollout and provided the real numbers for this blog post.

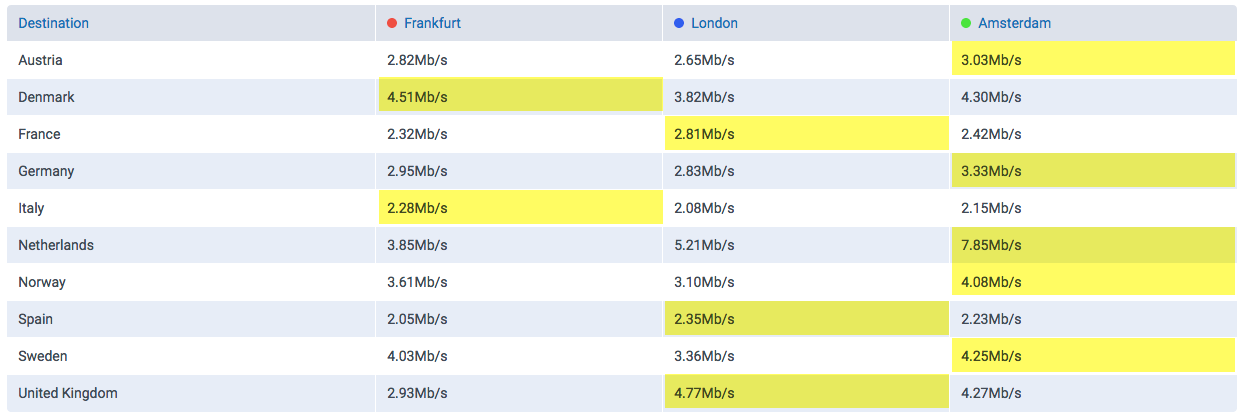

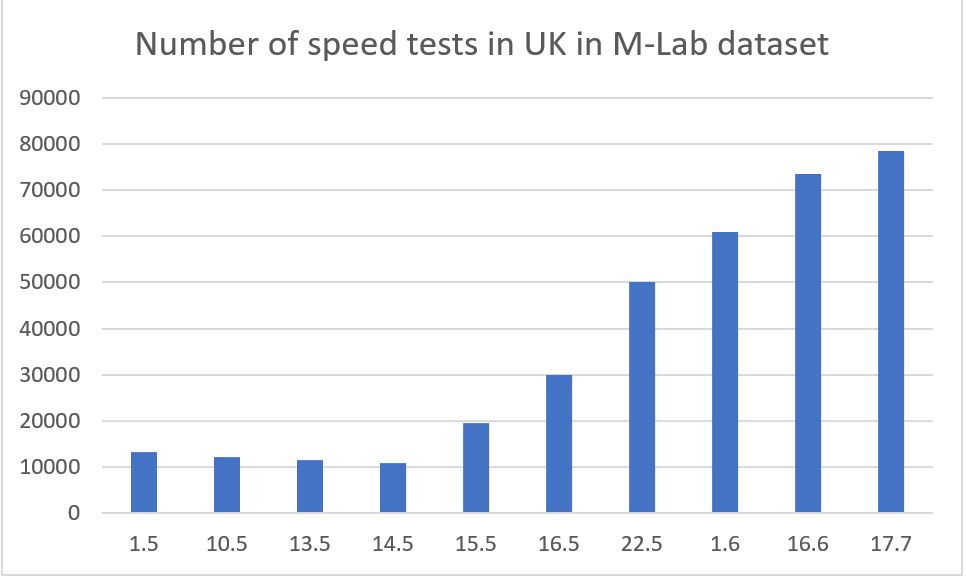

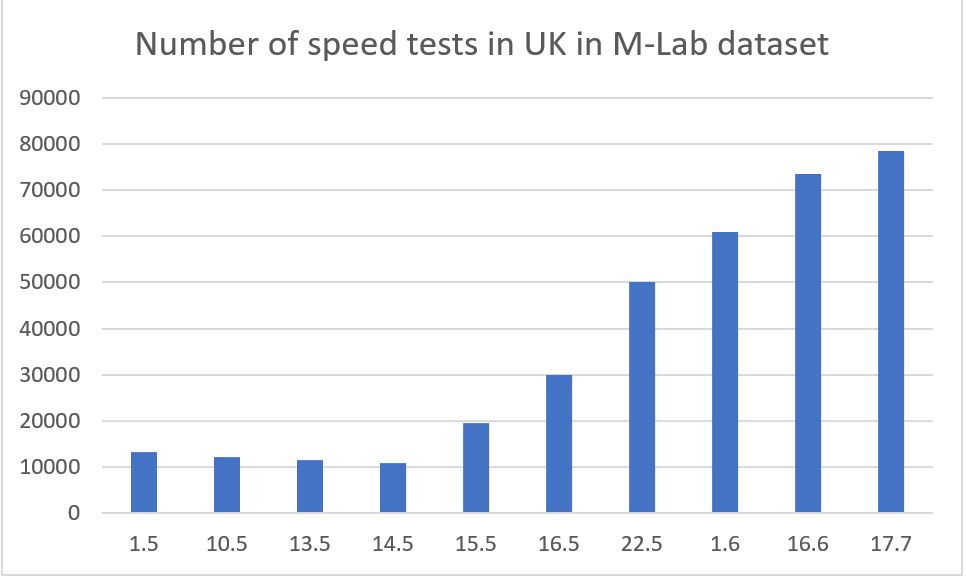

M-Lab speed test data is available for everyone to download through Google Cloud (of course). By counting the volume of data for each day, we could produce the following chart:

(Number of speed tests from UK in M-Lab dataset on random days in May, June and July 2017)

As we can see, Google started the rollout on the 15th of May. We can also see that Google did not roll the feature out to all UK users at once; over the course of several days it was shown in the search results to more and more users.

Impact on visitor numbers to our website

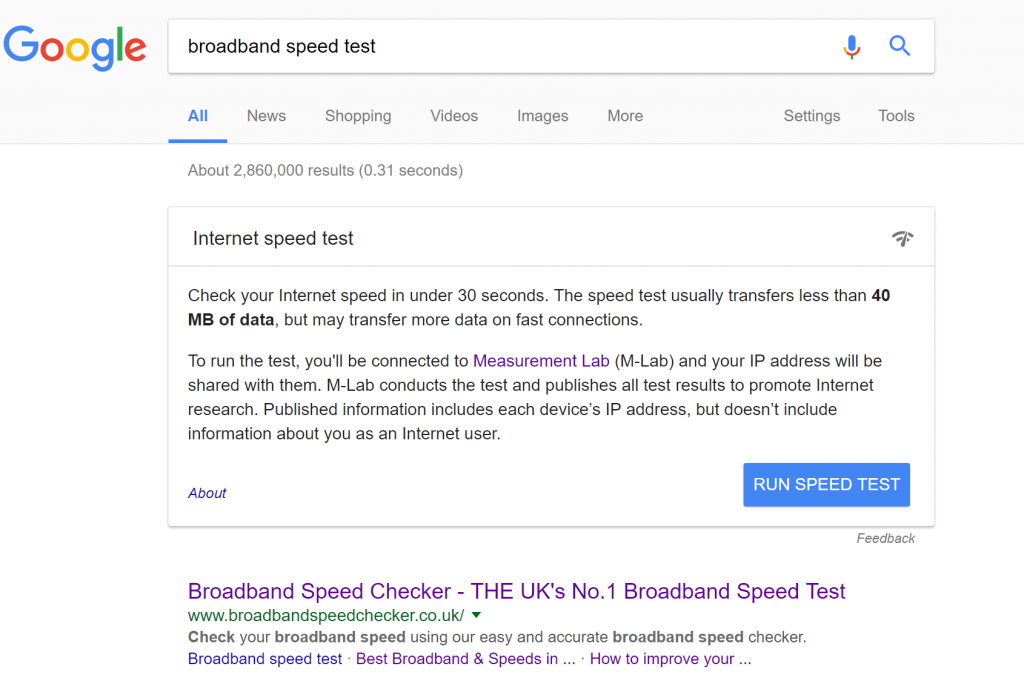

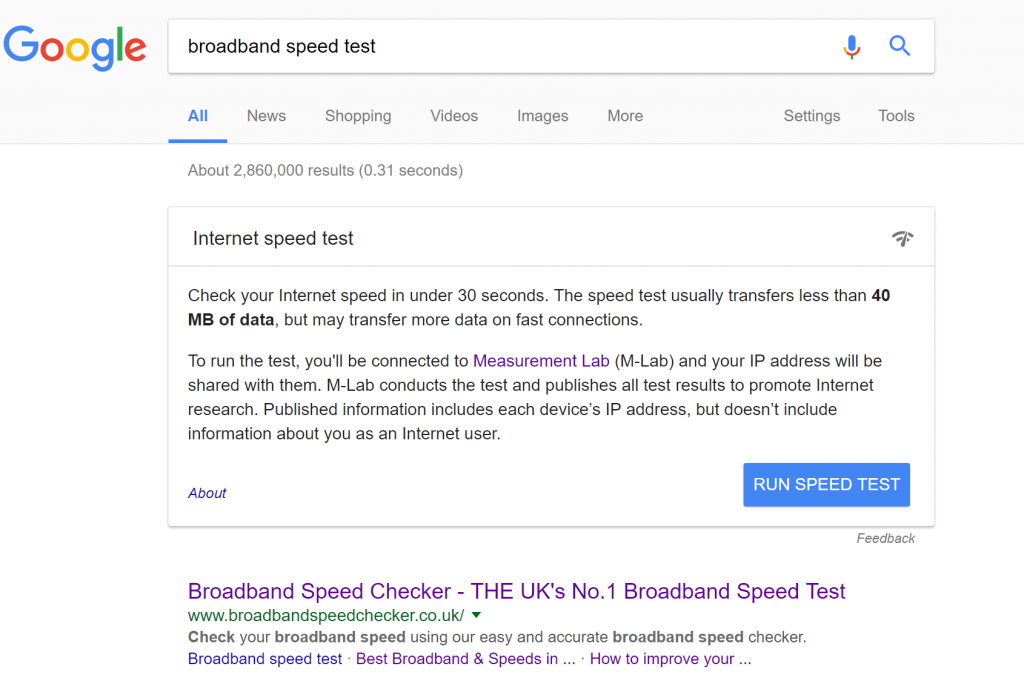

Here is what the search results look like in the UK for one of our main keywords:

As we can see, the Google speed test occupies significant space on the 1st page and pushes all other results down.

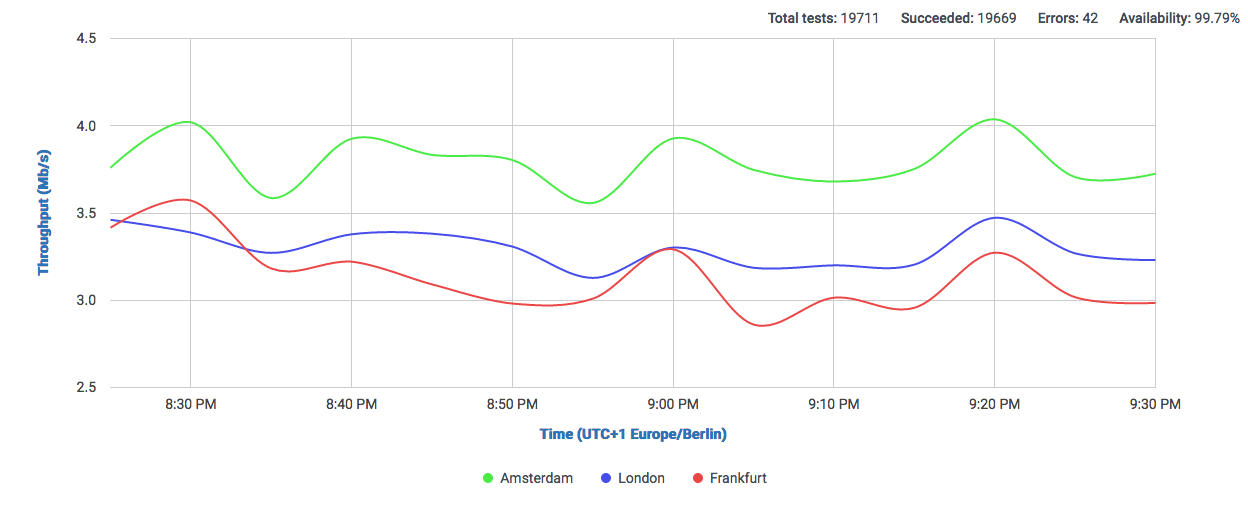

Here is the chart plotting user visits from Google (and Bing for comparison) before and after the Google speed test release. The drop in visitors begins when Google launches the speed test.

To better illustrate that the drop is due to the ranking change and not seasonal factors, here is the zoomed-in data from Bing, which does not show any meaningful change before or after the 15th of May, when Google launched.

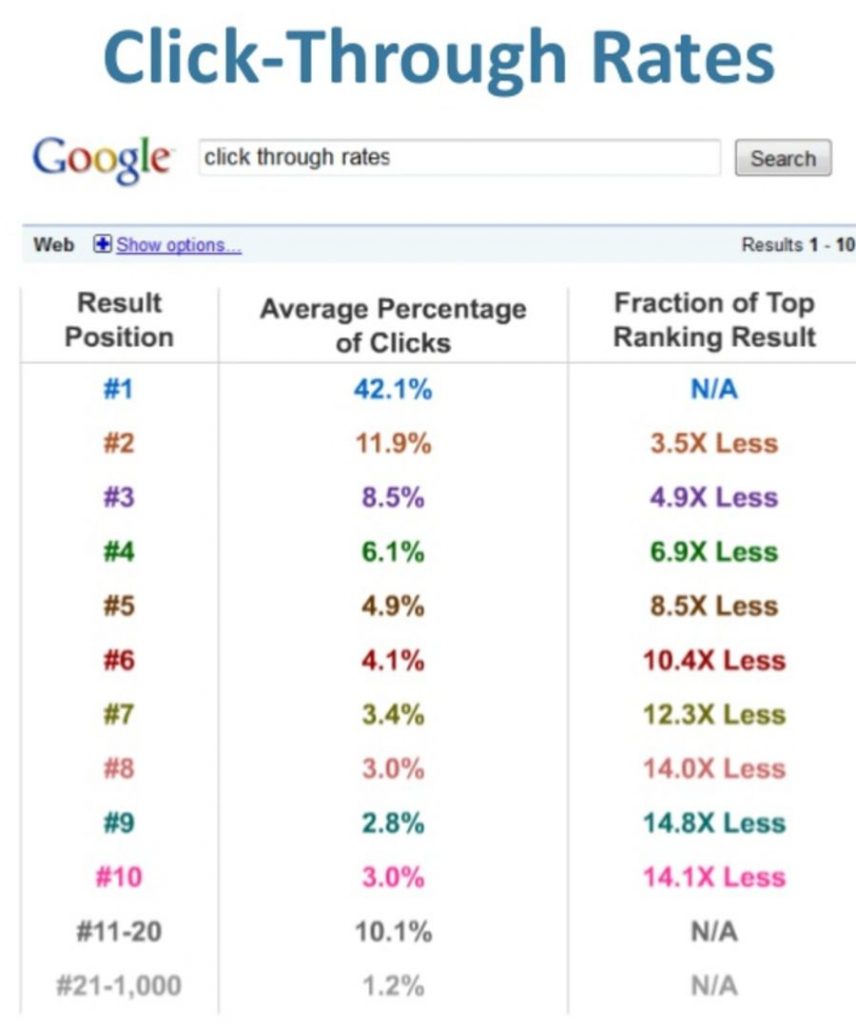

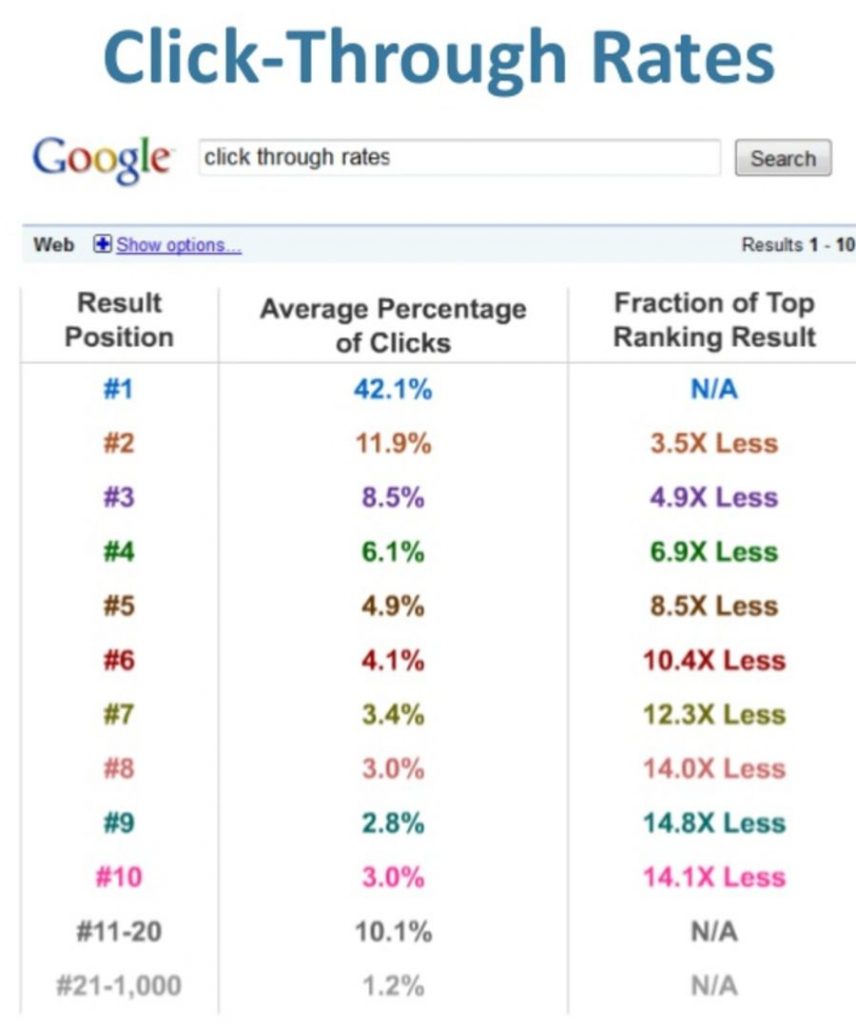

Looking at the average drops, we estimate a loss of about 5,000 visits per day out of about 25,000 daily visits from Google. That is about a 20% loss of Google traffic from being moved from position 1 to position 2.
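For anyone who wants to repeat the estimate with their own analytics numbers, the arithmetic is a single division. The helper name `relative_drop` is just an illustrative choice; the two inputs are the rounded daily averages quoted above.

```python
def relative_drop(lost_per_day, baseline_per_day):
    """Return the share of baseline traffic that was lost (0.0 to 1.0)."""
    if baseline_per_day <= 0:
        raise ValueError("baseline_per_day must be positive")
    return lost_per_day / baseline_per_day


# Rounded averages from this post: about 5,000 lost out of about 25,000.
google_drop = relative_drop(5000, 25000)
print(f"Google traffic loss: {google_drop:.0%}")
```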

Compared with industry-standard click-through data, e.g. from RankScience, a 20% drop is quite a good result; it could have been worse.

The M-Lab dataset also contains user IP addresses, which lets us extract some quite interesting insights. If we cross-reference the user IPs seen in the M-Lab data with our internal data, we can see that about 5% of users use both services. We can only speculate whether that is a good result or not, but for the user it is definitely useful to get information from 2 different sources and decide which is more relevant.
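The cross-referencing itself is essentially a set intersection. The sketch below is a simplified illustration rather than our production code: the function name `overlap_share` is hypothetical, the addresses are reserved documentation IPs, and it assumes the share is measured against our own users (the other possible denominator would be M-Lab's users).

```python
def overlap_share(own_ips, other_ips):
    """Share of our distinct user IPs that also appear in the other dataset."""
    own = set(own_ips)
    if not own:
        return 0.0
    return len(own & set(other_ips)) / len(own)


# Hypothetical addresses from reserved documentation ranges, not real users.
our_ips = ["192.0.2.1", "192.0.2.2", "192.0.2.3", "192.0.2.4"]
mlab_ips = ["192.0.2.3", "198.51.100.7", "203.0.113.9"]

share = overlap_share(our_ips, mlab_ips)
print(f"Users seen in both datasets: {share:.0%}")
```

Keep in mind that an IP address is only a rough proxy for a user, since several people can share one address and one person can appear under several.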

Conclusion

From our perspective, we are quite happy that the Google threat is not as serious as we originally thought. Losing 10% of our overall traffic (and 20% of our Google traffic) will have an impact on our bottom line, but we will survive. Luckily, we provide other features that users appreciate, such as mobile apps, stored results, mapping, comparisons and more. This, I believe, contributed heavily to such a small drop. I have no doubt many users will favor the convenience of getting a result with 1 click directly in the search results over going to a 3rd party site such as ours. Unfortunately, there is nothing we can do to compete with that and stay in business at the same time.

With Google favoring its own speed test, the M-Lab dataset is growing at a rate of almost 1 million results per day. At that rate it will record as many tests in less than a year as we have run in the last 10 years (over 300 million). That is the power of Google search dominance.